This is a guest post submitted by Paul A. Djupe, Denison University Political Science, who is an affiliated scholar with PRRI; Amy Erica Smith, Assistant Professor of Political Science at Iowa State University; and Anand Edward Sokhey, Associate Professor of Political Science at the University of Colorado at Boulder, as well as the associate director of the American Politics Research Lab and the incoming director of the LeRoy Keller Center for the Study of the First Amendment.

Google “peer review crisis,” and you will find dozens of pieces, some dating back to the 1990s, lamenting the state of peer reviewing. While these pieces focus in part on research replicability and quality, one major concern has been shortages in academic labor. In one representative article, Fox and Petchey (2010) argue that peer reviewing is characterized by a “tragedy of the commons” that is “increasingly dominated by ‘cheats’ (individuals who submit papers without doing proportionate reviewing).” Other commentators describe the burden faced by “generous peer reviewers” who feel “overwhelmed.”

So where does peer review in the social sciences stand? Are academics overburdened altruists or peer-review free-riders? Our new Professional Activity in the Social Sciences data set suggests the answer is “Neither.” Instead, most academics get few peer review requests and perform most of them. Reviewing is strongly correlated with academic productivity—research-productive scholars get more requests and perform more reviews. However, the ratio of peer reviews performed to article submissions is also lower for more productive scholars. Overall, this would appear to be a bit of a mixed bag from the perspectives of equity and representation in the academy. Here are the details.

In March 2017, we surveyed 900 faculty in political science departments across the United States, asking about a range of professional activities. In June, we followed up with a second group of social scientists, surveying 958 faculty in U.S. sociology departments. More details about the design and sample are available here.

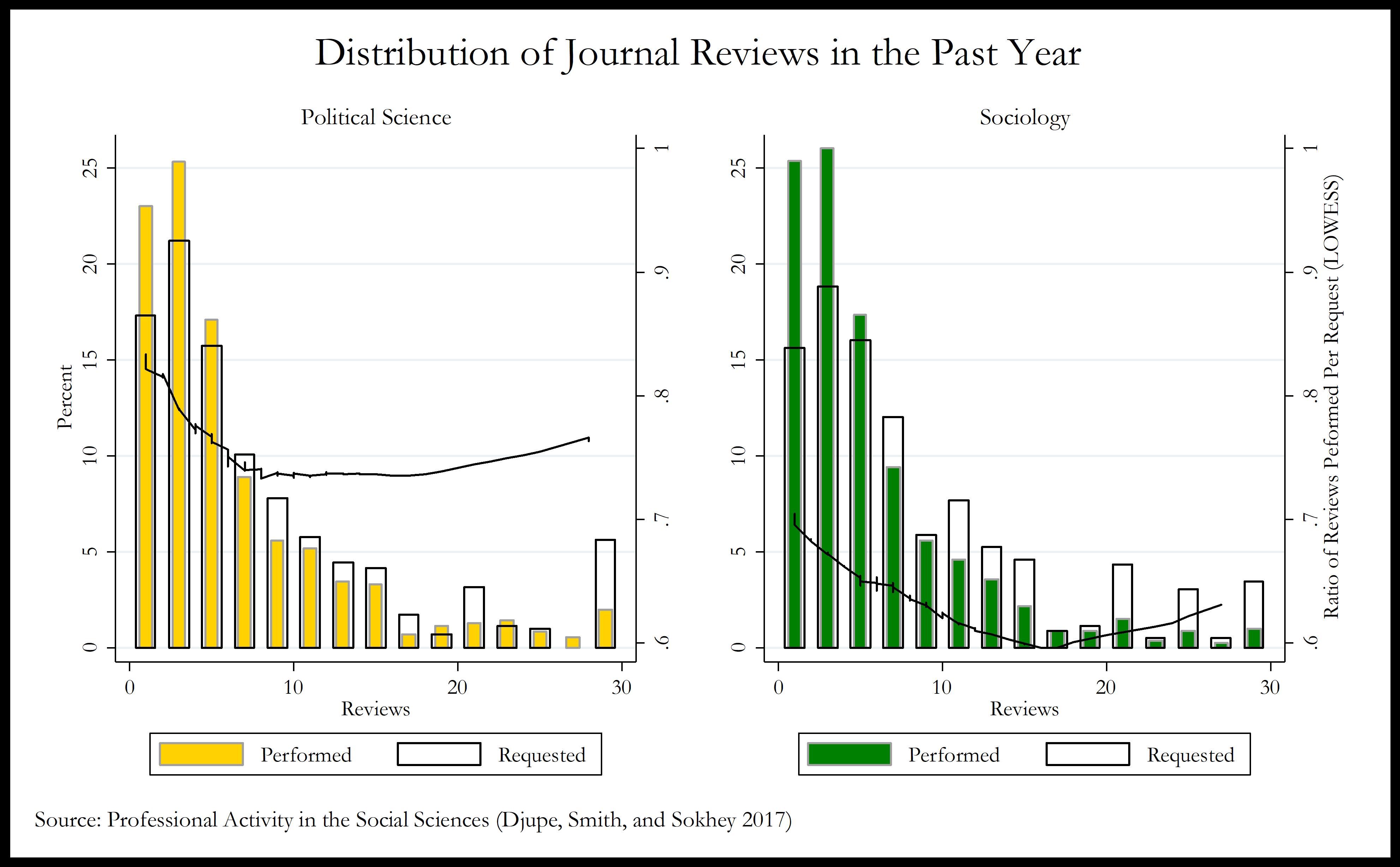

Figure 1 suggests that the peer review crisis may be overblown. The median political scientist and median sociologist in our data each report receiving five review requests a year. The median political scientist completes four of them; the median sociologist three.

At the same time, some social scientists do indeed receive a lot of requests. In both disciplines, the most sought-after decile of peer reviewers receives 20 or more requests per year. Not surprisingly, as the lowess smoothed curves in the figure show, people who get many requests are less likely to say “yes” to all of them. However, the negative relationship is not as strong as one might expect; many people accept reviews at the same rate regardless of how many requests they receive. And intriguingly, there is some evidence that at the high end the most in-demand reviewers are actually more prone to accept requests than are reviewers who are in moderate demand. This point would seem to echo a recent article in Nature, where it was noted that a small proportion of peer reviewers do the “lion’s share of the work.”

What drives this tremendous inequality in peer reviewing? That is, why do a small proportion of potential reviewers end up with so many requests? It turns out the answer is strongly tied to academic productivity. People who submit and publish more work get asked to review more.[1] The fact that the most visible—and probably vocal—individuals in the two disciplines get the most review requests certainly exacerbates the perception of a crisis.

So what would a more equitable distribution of reviewing look like? As the authors of this piece, we had a rousing and unresolved debate over the meaning of equity in reviewing. Two different approaches yield almost diametrically opposed answers.

Equity might mean more evenly distributing reviewing labor. In sociology, 18% of peer reviewers do half of peer reviews; in political science, 16% of reviewers do half the work. Sharing the burden more evenly would not only ease up pressures on the individuals some have called “peer review ‘heroes’” (Kovanis et al. 2016). It could also improve social science by reducing the role of gatekeepers and by expanding the voices influencing published social science research.
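As a rough illustration of how a concentration figure like “18% of reviewers do half of peer reviews” can be computed, the sketch below finds the smallest share of reviewers whose combined reviews reach half of the total. The function name and the sample counts are hypothetical, not taken from the survey data, and the calculation behind the figures above may differ in its details.

```python
def share_doing_half(review_counts):
    """Smallest fraction of reviewers whose reviews sum to at least half the total."""
    total = sum(review_counts)
    if total <= 0:
        raise ValueError("need at least one completed review")
    running = 0
    # Count the busiest reviewers first.
    for k, count in enumerate(sorted(review_counts, reverse=True), start=1):
        running += count
        if 2 * running >= total:
            return k / len(review_counts)


# Hypothetical yearly review counts for six scholars (illustrative only).
counts = [10, 5, 2, 1, 1, 1]
print(f"{share_doing_half(counts):.0%} of reviewers do half of the reviews")
```

The lower this share, the more the reviewing burden is concentrated in a few hands; with perfectly even reviewing it would sit at about 50%.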

Another approach to equity focuses on proportionality. One might argue that individuals who submit more articles to journals should expect to complete more reviews. In an intriguing 2014 analysis of his own “journal review debt,” Ben Lauderdale outlines a simple logic for assessing whether one is a net debtor or creditor in peer reviewing: peer review debt is the difference between the number of reviews one has written and the number one has “caused to be written.” Lauderdale computes an “author-adjusted” statistic, dividing the number of reviews received for a given article by the number of authors on the article. Similarly, Fox and Petchey (2010) propose a form of currency (the ‘PubCred’) earned by performing reviews that can be used to submit articles.
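The debt logic described here can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Lauderdale’s own code: the function name, the input layout, and the example numbers are all hypothetical. A positive balance marks a net creditor and a negative balance a net debtor.

```python
def review_balance(reviews_written, submissions):
    """Reviews written minus author-adjusted reviews caused.

    `submissions` is a list of (reviews_received, n_authors) pairs,
    one pair per submitted article.
    """
    # Each article's reviews are split evenly among its authors.
    caused = sum(received / authors for received, authors in submissions)
    return reviews_written - caused


# Hypothetical scholar: wrote 4 reviews; a solo-authored paper drew 3 reviews
# and a two-author paper drew 2 reviews.
balance = review_balance(4, [(3, 1), (2, 2)])
print(balance)
```

Here the scholar “caused” 3 + 1 = 4 author-adjusted reviews and wrote 4, so the account is exactly balanced.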

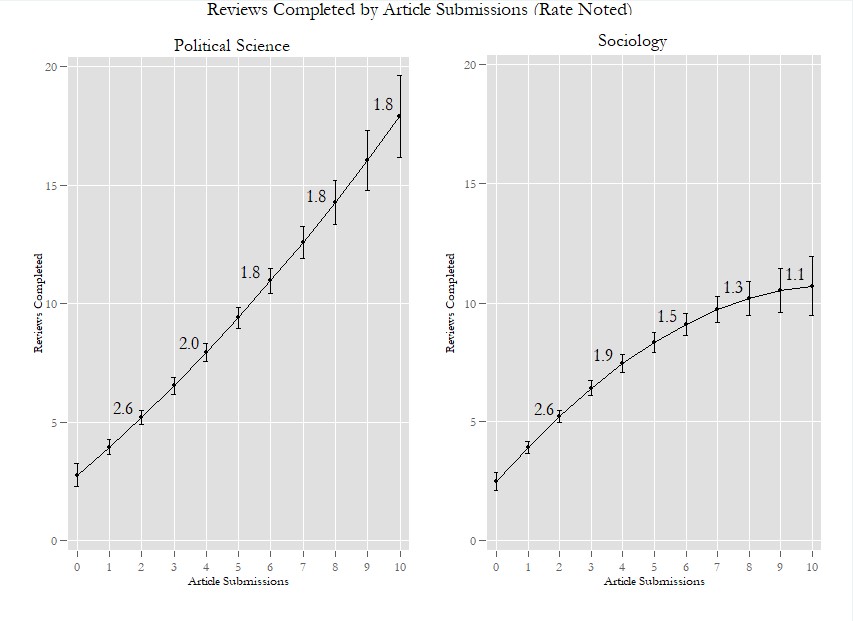

In Figure 2, we return to our survey data, plotting the ratio of completed reviews to article submissions in the past year. Following Lauderdale’s logic, an “equitable” distribution would require each individual to perform the number of reviews their work received, divided by the average number of authors on their work.

By the standard of proportionality, scholars who submitted no research in the past year but performed reviews are the most generous. In Lauderdale’s terms, they are the largest creditors. Without this group—comprising 25% of sociologists and 23% of political scientists—the peer review machine would grind to a halt. Of course, these individuals may be paying back debts they accumulated in the past. Similarly, we estimate that those who submitted just one article in the past year have a “credit” for the past year of between 1.1 and 1.9 reviews in sociology, and between 1.0 and 1.7 reviews in political science. [2]

By contrast, those who submit a lot of work to journals tend to be in the hole. Depending on our modeling assumptions, the predicted balance turns negative, on average, somewhere between 2 and 5 submissions in both disciplines.

Surprisingly, the two disciplines diverge in the behavior of their most productive scholars. The most productive political scientists are more “generous” than are political scientists with moderate levels of productivity, and in some models their reviewing balance becomes net positive. By contrast, the most productive sociologists are also the ones who owe the greatest number of peer reviews over the past year.

So which is it? Does the right tail of the distribution consist of “cheats” who free-ride on others’ labor? Or are these scholars “heroes”? Both frames are justified by these results. The problem comes down to the fact that research time and publishing activity are quite unevenly distributed in academia, with downstream consequences for peer reviewing. Encouraging a more egalitarian distribution of peer reviewing can democratize knowledge production, yet it can also impose a burden on faculty with high teaching loads if not accompanied by appropriate institutional incentives. The recent Publons project is an example of one attempt to create a form of recognition for peer review. We can only begin to make headway on problems with peer review from a position that is well informed by accurate data. Documenting and rewarding peer review is a great step in that direction.

NOTE: This post was submitted to Inside Higher Education as a potential op-ed on October 22, 2017; while it was not published by IHE, the research was discussed extensively in a news article published on October 24, 2017.

[1] In simple Ordinary Least Squares models, a scholar’s numbers of submissions and publications in the past year explain 19.8% of the variance in review requests in sociology and 18.4% in political science. They also explain 17.0% of the variance in reviews performed in sociology and 23.1% in political science.

[2] To calculate credit/debt, we adjust the results from Figure 2 by the estimated number of authors per submission and the estimated number of reviews received per submission (calculations available on request). Plausible assumptions about how many reviews each submission receives are hard to make, given differing journal practices for desk rejections, number of first-round reviews, and resubmissions. We use the ratio of the total number of reviews our respondents said they produced to the total, author-adjusted number of reviews they “consumed” or “caused.” We also produce estimates using a more conservative assumption of four reviews per submission. We adjust for the fact that in both disciplines, people who submit more articles have, on average, more coauthors per submission (reducing their “debt”). We have data only for the number of coauthors on each respondent’s most recent submission, which we assume is representative.

Steve Saideman is Professor and the Paterson Chair in International Affairs at Carleton University’s Norman Paterson School of International Affairs. He has written The Ties That Divide: Ethnic Politics, Foreign Policy and International Conflict; For Kin or Country: Xenophobia, Nationalism and War (with R. William Ayres); and NATO in Afghanistan: Fighting Together, Fighting Alone (with David Auerswald), and elsewhere on nationalism, ethnic conflict, civil war, and civil-military relations.
