When it comes to quantitative data in conflict studies, standards for collection, reliability, ethics, and usage remain behind the curve. We discuss five things that scholars can do to address these gaps.

It's happened to all of us (or at least those of us who do quantitative work). You get back a manuscript from a journal and it's an R&R. Your excitement quickly fades when you start reading the...

There’s an interesting debate going on over at openGlobalRights. Drawing on their recent Social Problems article, Neve Gordon and Nitza Berkovitch provocatively accuse human rights quantitative...

Welcome to the second edition of "Tweets of the Week." It was a busy seven days for news and my Twitter feed provided much useful information -- in micro-form. The Scottish independence referendum...

One of the recurring subjects among folks using data is: why does person x not share their data with me? Mostly because they are fearful and ignorant. Fearful? That their work will get scooped and/or their data might be found to be problematic. Ignorant? That they don't know that they are obligated to share their data once they publish off of it and that it is in their interest to share their data. There is apparently a belief out there that data should be shared only after the big project is published, not after the initial work has been published. I will address this as well as the...

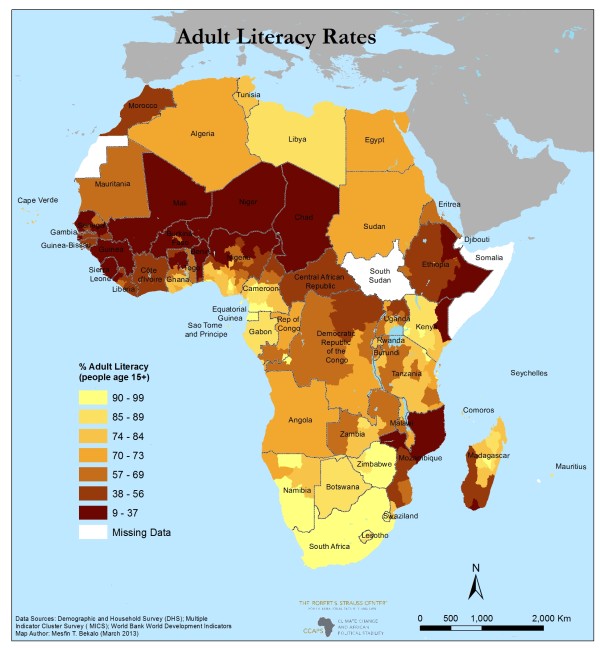

Todd Smith, Anustubh Agnihotri, and I have put together a new resource of subnational education and infrastructure access indicators for Africa, released as part of the Climate Change and Africa Political Stability (CCAPS) program at the University of Texas. This dataset provides data on literacy rates, primary and secondary school attendance rates, access to improved water and sanitation, household access to electricity, and household ownership of radio and television. The new CCAPS dataset includes data for 38 countries, covering 471 of Africa’s 699 first-level administrative districts. ...

In the Guardian this morning, Christof Heyns very neatly articulates some of the legal arguments against allowing machines the ability to target human beings autonomously - whether they can distinguish civilians and combatants, make qualitative judgments, be held responsible for war crimes. But after going through this back and forth, Heyns then appears to reframe the debate entirely away from the law and into the realm of morality: "The overriding question of principle, however, is whether machines should be permitted to decide whether human beings live or die." But this "question of principle"...

I often encourage my students to distill complex analytical concepts into terse, plain English. But some things can't be boiled down to a tweet, as I discovered this week when attempting to explain Cingranelli-Richards data coding in response to Joshua Foust's queries on my abusers' peace post. What I didn't think to tell him in response to his original question was: here is how you can look it up for yourself. So this post contains (I hope) a better answer to Josh's question but also a brief primer on the CIRI dataset, what it contains and how to use it. I should add that I've never used it...

OK, OK, OK, I know life is short and some of us need to get a life (I'm not in Seattle by the way), but this is a really cool app from Uppsala: From the iTunes description: "Data on 300 armed conflicts, more than 200 summaries of peace agreements, data on casualties etc, without having access to the Internet." I actually do think this is really cool and I can see real benefits to having this type of data at one's fingertips, but I do wonder how these dramatic changes in the ease of access to select types of data and data summaries (even from reputable places like Uppsala) will alter research...

I just finished watching a video of CrowdFlower's presentation at the TechCrunch50 conference. CrowdFlower is a platform that allows firms to crowdsource various tasks, such as populating a spreadsheet with email addresses or selecting stills from thousands of videos that have particular qualities. The examples in the video include very labor-intensive tasks, but tasks that a firm is unlikely either to need again or to consider worth dedicating staff to. As I was watching the video I thought about the potential to leverage such a platform for large-scale coding of qualitative data. In the...

It is an appropriately gloomy day here in Manhattan, as the city and the country remember the horror of September 11th, 2001 and attempt to continue to collectively heal. For me, part of that healing process has been trying to understand what happened, and more importantly, how to prevent it from ever happening again. Over the past eight years many others have been moved to investigate and analyze these events, which has led to a plethora of research on 9/11, some good, some not so good. As someone who attempts to read everything that comes across my desk related to these attacks, I thought...