According to a new survey I’ve just completed, not great. As part of my ongoing research into human security norms, I embedded questions on YouGov’s Omnibus survey asking how people feel about the potential for outsourcing lethal targeting decisions to machines. 1000 Americans were surveyed, matched on gender, age, race, income, region, education, party identification, voter registration, ideology, political interest and military status. Across the board, 55% of Americans opposed autonomous weapons (nearly 40% were “strongly opposed”), and a majority (53%) expressed support for the new ban campaign in a second question.

Of those who did not outright oppose fully autonomous weapons, only 10% “strongly favored” them; 16% “somewhat favored” them and 18% were “not sure.” However, by also asking respondents to explain their answers, I found that even those who clicked “not sure,” as well as a good many of the “somewhat favor” respondents, actually have grave reservations and lean toward a precautionary principle in the absence of information. For example, open-ended answers among the “not sure” respondents who claimed to “need more information” included the following:

“Generally, without additional info, I’m opposed. Sounds like too much room for error. Or the terminator in real life.”

“It seems like ideas with good potential eventually end up in the hands corrupt or dangerous people with bad intentions.”

“I have no concrete opinion, but I believe that it is dangerous enough with a human controller, let alone a robot.”

“Not sure, don’t know, really don’t think it’s a good idea…”

“I don’t know enough about this subject to have a fully formed opinion. It sounds like something I would oppose.”

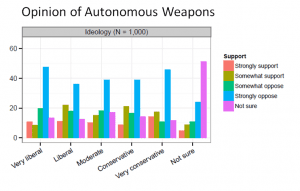

As these break-out charts detail, this finding is consistent across the political spectrum, with the strongest opposition coming from both the far right and the far left. Men are slightly more likely than women to oppose the weapons; women are likelier to acknowledge they don’t have sufficient information to hold a strong opinion. Opposition is most highly concentrated among the highly-educated, the well-informed, and the military. Many people are unsure; most who are unsure would prefer caution. Very few openly support the idea of machines autonomously deciding to kill.

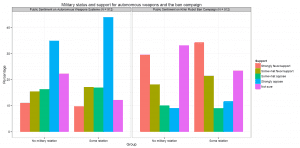

Interestingly, of the 26% of Americans who at least “somewhat favored” the idea of man-out-of-the-loop weapons, the most common reason given in the open-ended answers coded so far (about half the data set) is “force protection”: the idea that these weapons will be beneficial for US troops. Many of those who opposed an NGO-run ban campaign say that only the military should be making these kinds of decisions. In that light, it is worth noting that military personnel, veterans and those with family in the military are more strongly opposed to autonomous weapons than the general public, with the highest opposition among active duty troops.

I’ll have more presently on some of the more detailed findings of this study, as well as why it may matter in terms of the legal parameters of debate as the Campaign to Stop Killer Robots picks up speed. Meanwhile, happy to hear feedback as I’m new to survey research and will want to draw on some of these findings in a new paper I’m writing.

Charli Carpenter is a Professor in the Department of Political Science at the University of Massachusetts-Amherst. She is the author of 'Innocent Women and Children': Gender, Norms and the Protection of Civilians (Ashgate, 2006), Forgetting Children Born of War: Setting the Human Rights Agenda in Bosnia and Beyond (Columbia, 2010), and ‘Lost’ Causes: Agenda-Setting in Global Issue Networks and the Shaping of Human Security (Cornell, 2014). Her main research interests include national security ethics, the protection of civilians, the laws of war, global agenda-setting, gender and political violence, humanitarian affairs, the role of information technology in human security, and the gap between intentions and outcomes among advocates of human security.

I am sanguine about autonomous weapon systems per se, and they have been in operation for many years in the guise of mines, IEDs, and more recently smart munitions. More sophisticated autonomous weapon systems have the potential to be more discriminating than soldiers: imagine a system that responds only to being attacked. Conversely, the Western development of such systems merely reinforces western military strengths (how much more accurately, efficiently and safely can we deliver precision munitions?) without compensating for western strategic weaknesses: the targets of our weapons have long contended with our military superiority.

What John says. Dr. C., isn’t this kind of a strawman? Passive/defensive autonomous systems have been around a long time, but I really haven’t seen much evidence that autonomous offensive weapons (“killer robots”) are on the assembly line. Unmanned drone strikes have plenty of people in the loop, so the real question seems to be how much human error we are willing to tolerate.

I don’t think campaigners are claiming these weapons exist now – they are trying to pre-empt development/deployment of a technology that is likely coming down the pipeline and which they argue would present ethical/legal problems if deployed. Campaigners would argue that the “real question” here is whether humans should outsource lethal decisions to machines, which is really a separate issue from the question of how to lawfully use man-in-the-loop weapons like drones. As for the drone debate (a completely different debate in my mind), I really don’t think the key issues have to do with human error at all but rather with flagrant violations of international law on targeting. That said, the problem with drones is not the weapons themselves but the way they are used. By contrast, I think anti-killer-robot campaigners are arguing that the weapons themselves would not meet international law standards, and in this survey I primed respondents to think specifically about future autonomous weapons, not drones.