Another day, another piece chronicling problems with the metrics scholars use to assess quality. Colin Wight sends George Lozano’s “The Demise of the Impact Factor”:

Using a huge dataset of over 29 million papers and 800 million citations, we showed that from 1902 to 1990 the relationship between IF and paper citations had been getting stronger, but as predicted, since 1991 the opposite is true: the variance of papers’ citation rates around their respective journals’ IF [impact factor] has been steadily increasing. Currently, the strength of the relationship between IF and paper citation rate is down to the levels last seen around 1970.

Furthermore, we found that until 1990, of all papers, the proportion of top (i.e., most cited) papers published in the top (i.e., highest IF) journals had been increasing. So, the top journals were becoming the exclusive depositories of the most cited research. However, since 1991 the pattern has been the exact opposite. Among top papers, the proportion NOT published in top journals was decreasing, but now it is increasing. Hence, the best (i.e., most cited) work now comes from increasingly diverse sources, irrespective of the journals’ IFs.

If the pattern continues, the usefulness of the IF will continue to decline, which will have profound implications for science and science publishing. For instance, in their effort to attract high-quality papers, journals might have to shift their attention away from their IFs and instead focus on other issues, such as increasing online availability, decreasing publication costs while improving post-acceptance production assistance, and ensuring a fast, fair and professional review process.

The story of the Impact Factor echoes those of many poorly designed metrics: it doesn’t seem to matter much how good the metric is, so long as it involves quantification. Institutions use impact factor as a proxy for journal quality in the context of library acquisitions, tenure and promotion, hiring, and even, as Lozano notes, salary bonuses.

At some institutions researchers receive a cash reward for publishing a paper in journals with a high IF, usually Nature and Science. These rewards can be significant, amounting to up to $3K USD in South Korea and up to $50K USD in China. In Pakistan a $20K reward is possible for cumulative yearly totals. In Europe and North America the relationship between publishing in high IF journals and financial rewards is not as explicitly defined, but it is still present. Job offers, research grants and career advancement are partially based on not only the number of publications, but on the perceived prestige of the respective journals, with journal “prestige” usually meaning IF.

For those readers who don’t know what “Impact Factor” is, here’s a quick explanation: “a measure reflecting the average number of citations to recent articles published in the journal.” We’ve discussed it at the Duck a number of times. Robert Kelley once asked, more or less, “what the heck is this thing and why do we care?” I passed along Stephen Curry’s discussion, which includes the notable line “If you use impact factors you are statistically illiterate.” I’ve also ruminated on how publishers, editors, and authors are seeking to exploit social media to enhance citation counts for articles. In short, Impact Factor is a problematic measure even before we get into how easy it is to manipulate, e.g., by encouraging authors to cite other articles from the journal of publication.*
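The quick definition above glosses over the time window. The standard two-year version, as published in Journal Citation Reports, divides the citations a journal receives in a given year to items it published in the two preceding years by the number of citable items it published in those two years. Below is a minimal sketch of that arithmetic, assuming a hypothetical `Citation` record and taking the "citable items" counts as given. The optional `exclude_self` flag drops journal self-citations, in the spirit of the alternative measure mentioned in the footnote.

```python
from dataclasses import dataclass
from typing import Iterable, Mapping


@dataclass(frozen=True)
class Citation:
    """One citation link; this record layout is hypothetical."""
    citing_journal: str
    cited_journal: str
    citing_year: int  # year the citing paper appeared
    cited_year: int   # year the cited article appeared


def impact_factor(
    journal: str,
    year: int,
    citations: Iterable[Citation],
    citable_items: Mapping[int, int],
    exclude_self: bool = False,
) -> float:
    """Two-year impact factor of `journal` for `year`.

    Numerator: citations made in `year` to the journal's items from the two
    preceding years. Denominator: citable items it published in those years.
    With exclude_self=True, citations from the journal to itself are dropped.
    """
    window = {year - 1, year - 2}
    cites = sum(
        1
        for c in citations
        if c.cited_journal == journal
        and c.citing_year == year
        and c.cited_year in window
        and not (exclude_self and c.citing_journal == journal)
    )
    items = sum(citable_items.get(y, 0) for y in window)
    if items == 0:
        raise ValueError("no citable items in the two-year window")
    return cites / items


# Toy data, invented for illustration.
cites = (
    [Citation("Other", "J", 2020, 2019)] * 30   # counted
    + [Citation("J", "J", 2020, 2018)] * 10     # journal self-citations
    + [Citation("Other", "J", 2020, 2017)] * 5  # outside the two-year window
    + [Citation("Other", "J", 2021, 2019)] * 5  # made in the wrong year
)
items = {2018: 10, 2019: 10}
print(impact_factor("J", 2020, cites, items))                     # 2.0
print(impact_factor("J", 2020, cites, items, exclude_self=True))  # 1.5
```

In the toy data, a quarter of the counted citations are self-citations, which is exactly the lever the manipulation worry is about.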

In this sense, Impact Factor is similar to, and implicated in, many of the other proxies we use to assess quality in academia: publications in top peer-reviewed journals, rankings of academic presses, citation counts, and so forth. They’re all badly broken. And enough of us know that they’re badly broken that we’re in the zone of organized hypocrisy. I am willing to sacrifice some degree of accuracy to reduce bias in the short term, while pushing for change over the medium term.

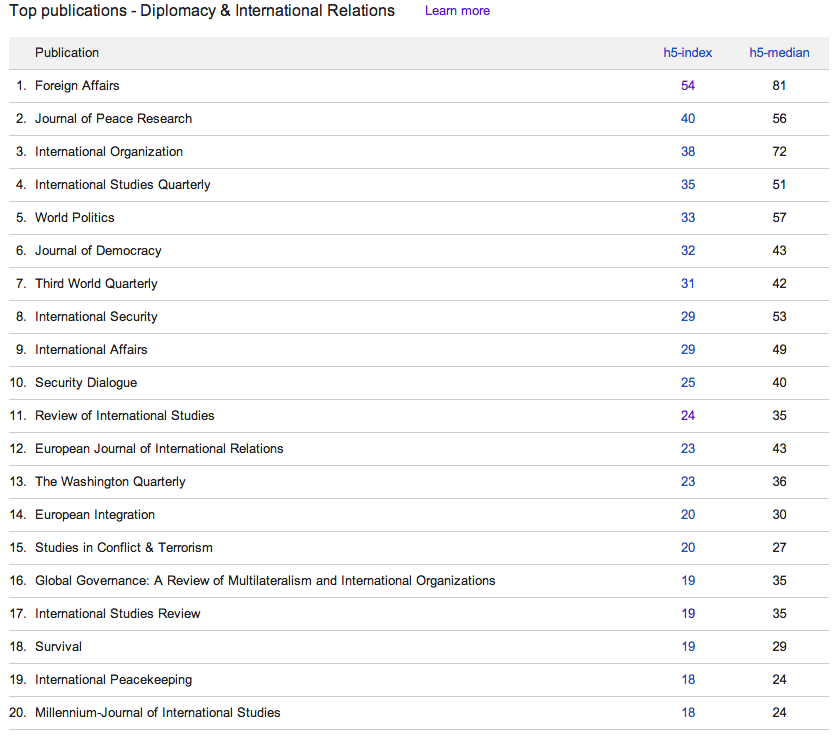

With respect to Impact Factor, there is no shortage of alternatives. We’re seeing a variety of new approaches to evaluating journal and scholarly impact. In terms of the former, the most conservative option on the table is to adopt an h-index approach. The fact that Google Scholar provides h5-index data for journals doesn’t hurt. For example, here are their current rankings in international relations:

Readers will note that, in addition to all of the problems that plague Google Scholar’s database, the folks who run it should probably consult more academics when constructing their categories. The Journal of Conflict Resolution has an h5-index of 38, but doesn’t appear here.
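Google Scholar defines a journal’s h5-index as the h-index of the articles it published in the last five complete years: the largest h such that h of those articles have at least h citations each. Here is a short sketch of that calculation; the `(publication_year, citations)` input format and the function names are hypothetical.

```python
from typing import Iterable, Tuple


def h_index(citation_counts: Iterable[int]) -> int:
    """Largest h such that at least h items have >= h citations each."""
    h = 0
    for rank, count in enumerate(sorted(citation_counts, reverse=True), start=1):
        if count < rank:
            break
        h = rank
    return h


def h5_index(articles: Iterable[Tuple[int, int]], last_complete_year: int) -> int:
    """h-index over articles published in the five complete years ending at
    last_complete_year; `articles` holds (publication_year, citations) pairs."""
    first_year = last_complete_year - 4
    return h_index(c for year, c in articles if first_year <= year <= last_complete_year)


# Toy numbers, invented for illustration.
papers = [(2015, 100), (2019, 6), (2020, 5), (2021, 4), (2022, 3), (2023, 1)]
print(h5_index(papers, 2023))  # 3: the 2015 paper falls outside the window
```

Unlike IF, the h5-index is not an average, so journals that publish more articles tend to score higher on it.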

My own view is that, if we’re going to rely on some kind of citation-count, we should be doing moderately more sophisticated things. I know that a number of my friends and I already use IF and h-index data as a rough yardstick for individual articles. For example, we will look at whether an article’s citation count is higher or lower than the relevant IF for the journal it appeared in.
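One way to make the "above or below the journal’s IF" check a bit more careful is to compare like with like. IF is roughly an average of citations per article per year, so the article’s side can be expressed as its mean annual citations. The helper below is hypothetical and assumes you already have the article’s citations per year and the journal’s IF.

```python
from statistics import mean
from typing import NamedTuple, Sequence


class IFComparison(NamedTuple):
    difference: float  # article's mean annual citations minus journal IF
    ratio: float       # > 1 means cited more than the journal's average article


def compare_to_journal_if(citations_per_year: Sequence[int], journal_if: float) -> IFComparison:
    """Compare an article's mean annual citations with its journal's IF."""
    if not citations_per_year:
        raise ValueError("need at least one year of citation data")
    if journal_if <= 0:
        raise ValueError("journal_if must be positive")
    rate = mean(citations_per_year)
    return IFComparison(rate - journal_if, rate / journal_if)


# The same citation record placed in two hypothetical journals:
print(compare_to_journal_if([2, 4, 6], journal_if=2.0))   # IFComparison(difference=2.0, ratio=2.0)
print(compare_to_journal_if([2, 4, 6], journal_if=10.0))  # IFComparison(difference=-6.0, ratio=0.4)
```

Because IF only counts citations in the two years after publication, this comparison gets looser for older articles.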

In general, I think that this kind of comparative analysis makes sense. We should be assessing both articles and journals in terms of marginal value, e.g., asking what the “citation premium” is for appearing in International Organization versus various lower-ranked journals. While there are significant statistical challenges to this kind of analysis, they aren’t all that different from the ones we normally confront in our research. Many of the relevant control variables are already available in existing databases and via the alternative measurements I linked to earlier. Indeed, I suspect that this kind of work already exists; if I had more time on my hands today, I would already have found it.

Thoughts?

—————-

*There is an alternative measure that removes journal self-citations, but nobody uses it.

Daniel H. Nexon is a Professor at Georgetown University, with a joint appointment in the Department of Government and the School of Foreign Service. His academic work focuses on international-relations theory, power politics, empires and hegemony, and international order. He has also written on the relationship between popular culture and world politics.

He has held fellowships at Stanford University's Center for International Security and Cooperation and at the Ohio State University's Mershon Center for International Studies. During 2009–2010 he worked in the U.S. Department of Defense as a Council on Foreign Relations International Affairs Fellow. He was the lead editor of International Studies Quarterly from 2014 to 2018.

He is the author of The Struggle for Power in Early Modern Europe: Religious Conflict, Dynastic Empires, and International Change (Princeton University Press, 2009), which won the International Security Studies Section (ISSS) Best Book Award for 2010, and co-author of Exit from Hegemony: The Unraveling of the American Global Order (Oxford University Press, 2020). His articles have appeared in a lot of places. He is the founder of The Duck of Minerva, and also blogs at Lawyers, Guns and Money.

Great thoughts here.

JCR is, IIRC, under “General Social Science,” which is no less weird, hence my Facebook befuddlement at the fact that J Euro Pub Pol is apparently a top-5 PS journal.

Very thoughtful post, Dan. I’m with you in spirit, but I can’t help being a bit concerned with the way you implicitly treat citation counts as a superior measure to impact factor. Maybe, maybe not. Tom Friedman has op-eds that get 100+ citations on Google Scholar, despite being … well, not rigorous. Citations count popularity, not quality.

Solving this problem is really tricky, of course. I think there actually is some benefit for using a journal’s reputation as a proxy for quality, even with all of the problems associated with that approach. Absent any other info, I think I would probably trust something published in IO more than something published in the Washington Quarterly, regardless of citation count. Am I wrong to do so?

One is rarely without “any other info”. There’s the identity of the author and, more important, the content of the piece itself. Good articles can appear in little-known outlets and crappy articles sometimes appear in well-known outlets. Thus, neither the impact factor nor the citation count for individual articles means all that much, imo.

The problem is that we always need to specify “for what.” Citation counts for an article do provide a measure of how many times someone felt it necessary, useful, or important to cite something. That’s a poor proxy for quality, and a rather blunt one for influence (as noted in some of the pieces that I linked to), but it isn’t meaningless as long as we assign it a plausible meaning. The problem here is “metric drift”: the tendency to over-interpret a measure simply because it exists.

My main point is about how we should use citation metrics. Impact factor, if you recall, is simply the number of citations to a journal’s articles divided by the number of those articles (for specified periods). So IF is already a citation-count metric. And it is falling apart.

My suggestion is to replace IF, as well as aggregate counts of citations for specific articles, with statistical analysis that focuses on marginal value. So instead of looking, for example, at a journal’s IF, we would measure “impact” by estimating how much appearing in that journal changes the number of citations an article receives, relative to some reference point. IO’s marginal value might be +5 citations/year when compared to the median IR journal, World Politics might be +6, and Journal of Strategic Studies might be -2.
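Here is a rough sketch of the marginal-value idea, under stated assumptions. It regresses each article’s citations per year on journal indicator variables (omitting a reference journal) plus control covariates, so each journal’s coefficient is its estimated citation premium relative to the reference. `relative_to_median` then re-centers those premiums on the median journal effect, which is one possible reading of "the median IR journal." The data layout, journal labels, and the single "article age" control are hypothetical. A serious analysis would need better controls, a count model, and uncertainty estimates. The OLS is solved in plain Python via the normal equations so the sketch has no dependencies.

```python
from statistics import median
from typing import Dict, List, Sequence


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("design matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def journal_premiums(articles: Sequence[dict], reference: str) -> Dict[str, float]:
    """OLS of citations/year on an intercept, journal dummies, and controls.

    Each article is a dict with hypothetical keys "journal", "controls"
    (list of numbers), and "cites_per_year". The reference journal's dummy is
    omitted, so every other journal's coefficient is its premium vs reference.
    """
    journals = sorted({a["journal"] for a in articles} - {reference})
    rows, y = [], []
    for a in articles:
        dummies = [1.0 if a["journal"] == j else 0.0 for j in journals]
        rows.append([1.0] + dummies + [float(x) for x in a["controls"]])
        y.append(float(a["cites_per_year"]))
    k = len(rows[0])
    # Normal equations: (X'X) beta = X'y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    beta = solve(xtx, xty)
    premiums = {j: beta[1 + idx] for idx, j in enumerate(journals)}
    premiums[reference] = 0.0
    return premiums


def relative_to_median(premiums: Dict[str, float]) -> Dict[str, float]:
    """Re-express premiums against the median journal effect."""
    mid = median(premiums.values())
    return {j: p - mid for j, p in premiums.items()}


# Synthetic, noise-free data: cites/year = 2 + journal effect + 0.5 * article age.
effects = {"ref_journal": 0.0, "journal_a": 5.0, "journal_b": -2.0, "journal_c": 1.0}
articles = [
    {"journal": j, "controls": [age], "cites_per_year": 2 + e + 0.5 * age}
    for j, e in effects.items()
    for age in (1, 2, 3, 4)
]
premiums = journal_premiums(articles, reference="ref_journal")
print(premiums)                      # recovers the planted effects up to float rounding
print(relative_to_median(premiums))  # same effects, shifted so the median journal is 0
```

Because the toy data contain no noise, the regression recovers the planted effects. Real citation data would not be this cooperative.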

I think your last paragraph makes the point. These days, it is mostly about the paper, not the journal. Sure, some high IF journals have higher circulation and more readers. IF could be used as a measure of “circulation” or “readership” only, not necessarily scientific quality. Then, it could be used as a correction factor.

Given two papers with the same citation rate, one in an elite journal and the other in a regular journal, the one from the modest journal should be deemed “better”.