For the past two years, Jon Monten, Jordan Tama, and I have been working with the survey team at the Chicago Council on Global Affairs (Dina Smeltz and Craig Kafura) to revive the leader surveys that the Council used to run alongside its foreign policy opinion surveys of the American public. Because of the expense and the difficulties of getting responses, the Council discontinued those surveys in 2004, leaving academics with very limited options for comparing public and elite attitudes. With the release of a Council report and a recent piece ($, DM me for a PDF) in Foreign Affairs, we are happy to announce the return of the surveys and the release of the public and leader data (currently in SPSS format, with other file types to be uploaded). In light of the LaCour scandal, we wanted to make that data widely available to scholars as soon as possible. I thought I’d use this post to talk about the challenges of reinvigorating those surveys.

The History of Elite Opinion Polls

From the 1970s through the early 2000s, the Chicago Council surveys were hugely valuable public goods for the study of U.S. foreign policy as well as international relations (ICPSR has all the old surveys, here). In their 2006 book The Foreign Policy Disconnect, Benjamin Page and the Chicago Council’s Marshall Bouton took advantage of the last round of comparable surveys to explore the gap between leaders and the mass public on a range of questions. These surveys have been instrumental in the work of Eugene Wittkopf, Nacos/Shapiro/Isernia, Greg Holyk, and Cunningham and Moore, among others. Jon and I looked at the trends in internationalism among both groups in a 2012 piece for Political Science Quarterly. From 1976 to 1996, scholars Ole Holsti and James Rosenau had their own effort to assess leader attitudes, the Foreign Policy Leadership Project.

The surveys allowed scholars to analyze the gaps between the two groups, to characterize the cohorts and constellations of thought among elites and the mass public, and to examine whether elite opinion was driving mass opinion, the major drivers of opinion for both groups, changes over time, and specific questions about attitudes towards the use of force, multilateral engagement, and other topics. After 2004, the Chicago Council’s mass surveys continued but the leader surveys did not, leaving a huge void for scholars interested in ideational currents in U.S. foreign policy. Though other publications like The Atlantic and Foreign Policy have periodically carried out leader surveys, the data and survey methodology are never public, and because question wording and timing differ from those of mass public opinion polls, comparisons between groups are problematic. You also never get any demographic data on individual respondents with which to explore the correlates of attitudes. Moreover, because these surveys are not carried out on a regular and sustained basis with the same question wording, it is difficult to track changes in opinion over time.

While the Chicago Council surveys did not always ask the same questions in the same way across time and between leader and mass public surveys, they were the best set of data available, and it was thus a real shame when costs became too prohibitive to carry them out again. In 2013, the Council on Foreign Relations in conjunction with the Pew Research Center released a poll of CFR members and the mass public (following up an earlier effort in 2009). While this is an important effort, it has its limitations. CFR members (and I am one) may not be representative of wider leader opinion. The membership may skew towards certain professions (finance, government), regions of the country (the Northeast), or ideological trajectories (middle of the road, pro-free trade internationalism). I don’t believe that data is available for analysis, so it’s difficult for scholars to derive their own independent conclusions.

The Revived 2014 Chicago Council Leader Survey

Our survey actually has a couple of components. We sought to replicate the format of the previous Chicago Council surveys which surveyed leaders of different professional groups with an interest in foreign relations, namely academics, business, Congress, executive branch, NGO and interest groups, journalists, labor, and think tank experts. Rather than farm this out to a survey market research firm at the cost of $50,000-$100,000 or higher, we did this ourselves. Many of the names and email addresses were derived from an on-line subscription-based directory called the Leadership Library (LL for short, pages 26-28 of the report describe the selection strategy). For example, to get a list of Executive Branch officials, here is how the report describes the strategy:

Using LL, employees of the Defense Department, Homeland Security Department, or State Department holding the position of deputy assistant secretary, assistant secretary, undersecretary, or deputy secretary, or with the word “senior” in their title were included. Also included were employees of any other federal department with the position of deputy assistant secretary, assistant secretary, undersecretary, or deputy secretary and listed with a job function classified as “international”; members of the White House National Security staff with the position of assistant to the president, special assistant to the president, senior director, or director; and US ambassadors.

Some groups had more email addresses than others. We supplemented Leadership Library in a number of ways. The university list was derived with the help of the folks who conduct the TRIP survey. For some groups, we supplemented lists of potential respondents with other sources, though we generally tried to be comprehensive and systematic in our efforts to recruit new subjects. For example, for NGO leaders, in addition to Leadership Library, we went through all the lists of NGOs of a certain budget size that worked on international affairs in Charity Navigator and then sought the leadership emails for them.

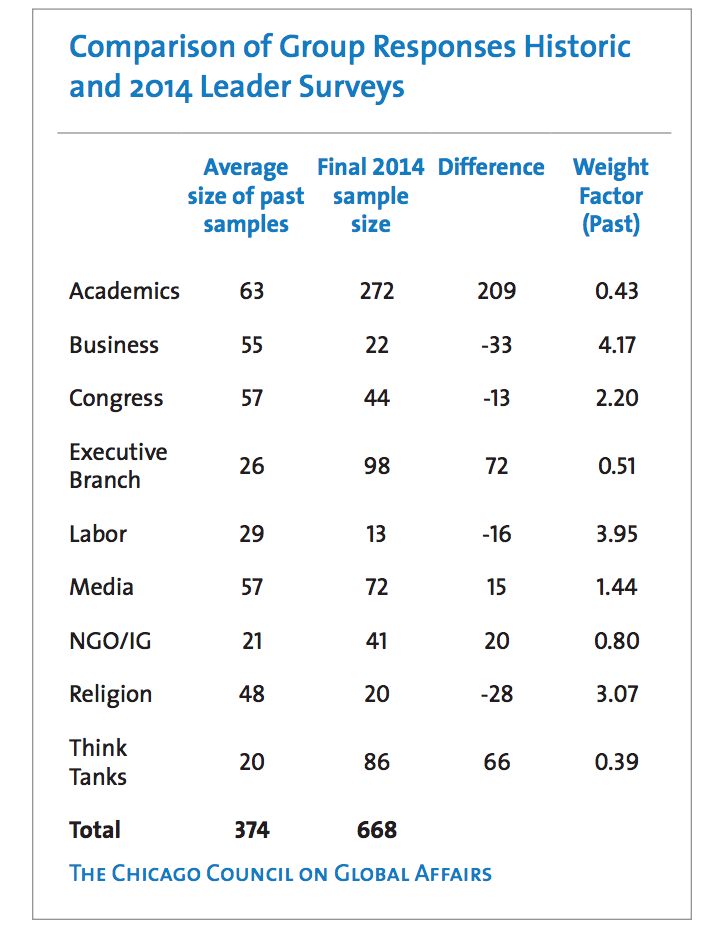

The survey was programmed in Qualtrics and was on-line only, unlike the first iterations of the Chicago Council surveys, when respondents received mailed surveys or in-person interviews. In our survey, all of these folks were emailed by Chicago Council President Ivo Daalder in May, with follow-up efforts directed at low response rate groups in July. All told, we received 668 responses from these groups, with some groups like academics over-represented in the survey and other groups such as business, labor, and religious groups under-represented. See the accompanying figure.

In addition, we recruited a sample of 517 alumni of the National War College. These respondents tended to be considerably older, more likely to be white, and more conservative than other cohorts. We consider them to be a military sample, but since previous surveys did not include this group, we didn’t analyze them formally in the report we recently released. That group may be representative of wider thought among military types, if not active duty, but it skews very old: 48% are between 60 and 74, while almost 25% are over 75. This data is included in the dataset, and we encourage other people to make use of it. We may ultimately write a follow-on report based on this data, but rather than sit on it, we wanted to put it out there.

Challenges of Aggregation

The challenge is then deciding how to aggregate these survey responses to create a weighted average for “elites.” If we were to treat all responses equally, then academic voices would be overrepresented in overall “elite” averages. Since no comprehensive or exhaustive list of elites exists, there are a couple of options. One is to weight each group equally. Another is to use each group’s average number of responses in previous surveys and weight accordingly. This is what we did in the report, though we did some sensitivity tests with equal weighting. You may have other ideas about appropriate weighting strategies.
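
To make the difference between these options concrete, here is a minimal Python sketch. It is an illustration only, not the procedure used in the report: the group names, the 0/1 responses, and the "historical" weights are all hypothetical, and the function names are my own. It compares pooling every response, weighting each group equally, and weighting by an assumed set of past response counts.

```python
from typing import Dict, List


def group_means(responses: Dict[str, List[float]]) -> Dict[str, float]:
    """Mean response within each group (groups with no responses are skipped)."""
    return {g: sum(v) / len(v) for g, v in responses.items() if v}


def weighted_average(means: Dict[str, float], weights: Dict[str, float]) -> float:
    """Average of group means, with weights normalized to sum to 1."""
    total = sum(weights[g] for g in means)
    return sum(means[g] * weights[g] for g in means) / total


# Hypothetical data: 1 = agrees with some statement, 0 = does not.
# Academics are deliberately over-represented, as in the text above.
responses = {
    "academics": [1, 1, 1, 0, 1, 1, 1, 0],
    "business": [1, 0],
    "labor": [0, 0, 0, 1],
}

means = group_means(responses)

# Option 0: treat every response equally (weights = group sample sizes).
pooled_avg = weighted_average(means, {g: len(v) for g, v in responses.items()})

# Option 1: weight each group equally.
equal_avg = weighted_average(means, {g: 1.0 for g in means})

# Option 2: weight by average response counts from past surveys.
# These counts are invented for illustration only.
hypothetical_past_counts = {"academics": 20, "business": 50, "labor": 30}
historical_avg = weighted_average(means, hypothetical_past_counts)

print(f"pooled: {pooled_avg:.3f}")
print(f"equal group weights: {equal_avg:.3f}")
print(f"historical weights: {historical_avg:.3f}")
```

In this toy example the pooled figure is pulled towards the academic group mean simply because academics supplied the most responses, which is the problem that group-level weighting is meant to address. Running the same comparison on the real data is one way to redo the sensitivity tests mentioned above.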

Challenges of Completeness

Low response rates from the business, labor, religious, and Congress groups were unfortunate. In previous elite surveys we conducted with samples of convenience, we relied on multiple interlocutors to reach out to elites on our behalf. For example, in a survey of former Executive Branch officials that was reported in Foreign Affairs, we had several Democratic think tank interlocutors reach out to their networks and Republicans reach out to theirs. We ended up with about a 40% response rate on that survey, which had about 100 subjects. Using Leadership Library, we did something similar with Congressional staff and ended up with a similar final total but with about a 10% response rate. Looking ahead, we may need supplementary interlocutors and other strategies to boost response rates from particular groups.

One issue makes the Chicago Council historic survey design somewhat problematic in general. The party in power should have more people occupying the Executive Branch during a given survey iteration. Former Executive Branch folks will only be captured by our survey if they cycled out of government into other professional life such as business, academia, think tanks, or other professional groups. The business list from Leadership Library may not pick up on folks like Henry Kissinger who have their own consultancies. Another approach is the one taken by Michael Desch and Paul Avey, who in their ISQ piece went through and identified all of the senior government officials who had served in previous administrations in national security-oriented agencies. We were in consultation with them about their survey but elected to employ the previous Chicago Council approach for the 2014 survey to be comparable to the past. Their study was more narrowly focused on national security elites, so it would have missed folks at other agencies like Treasury or EPA who worked on international affairs but not strictly in the national security field.

Looking Ahead

Another iteration of the mass survey will be out later this year. We hope to have another iteration of the leader survey (and a public survey) out in 2016 before the presidential election. While space is at a premium, we welcome ideas about questions or areas that we didn’t include in the survey. Unlike the TRIP Snap Polls, which aim to gauge the opinions of academics on topical issues, the Chicago Council surveys tend to capture more long-running attitudes and processes about the use of force, multilateral engagement, and the cooperative elements of internationalism like foreign aid. Some of the questions get at topical issues (the rise of ISIS, Russia in Ukraine), but the persistent value of the surveys is their ability to gauge public and leader opinion about recurrent issues in foreign policy. At least, that’s our bet. The surveys should pick up on some emergent properties and issues. It would have been nonsensical to not ask more questions about terrorism after 9/11. Other issues like climate change were not relevant in 1979, so the surveys should have a mix of continuity and dynamism to reflect the times.

In 2014, we incorporated an experimental design into the leader and public surveys about the use of force. We have an APSA paper and journal article in process on that experiment, which randomized whether or not a multilateral endorser was supporting a variety of use of force scenarios. There are some challenges of the experimental design we are working through, but the data are included, so please get in touch if this is an area of interest to you. We also look forward to ideas about how to improve the experimental design going forward and other opportunities for seeing how survey language might be manipulated to test certain arguments about international relations, foreign policy, and opinion.

Length is always an issue with elite surveys, but we hope that we’ll have fine-tuned the approach in 2016 with a sharper survey and a higher response rate from various groups. We hope you’ll use the data and let us know how to make the survey better.

Joshua Busby is a Professor in the LBJ School of Public Affairs at the University of Texas-Austin. From 2021 to 2023, he served as a Senior Advisor for Climate at the U.S. Department of Defense. His most recent book is States and Nature: The Effects of Climate Change on Security (Cambridge, 2023). He is also the author of Moral Movements and Foreign Policy (Cambridge, 2010) and the co-author, with Ethan Kapstein, of AIDS Drugs for All: Social Movements and Market Transformations (Cambridge, 2013). His main research interests include transnational advocacy and social movements, international security and climate change, global public health and HIV/AIDS, energy and environmental policy, and U.S. foreign policy.
