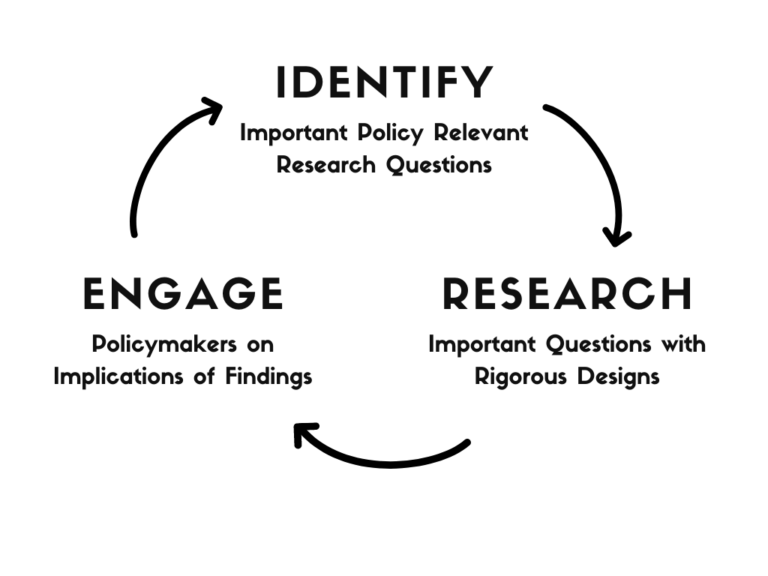

Academic and policy circles are increasingly focused on research-policy partnerships. These partnerships are often built through co-creation, or “the joint production of innovation between combinations of industry, research, government and civil society.”...